Common Causes of Web Store Performance Problems

Caution: this content was last updated over 4 years ago

If you’re unsure what’s causing your web store to slow down, or if you want a primer on some of the most common problems we encounter, then this page is for you. In this article, we highlight nine key areas to consider, along with some quick tips to point you in the right direction.

1. Content Delivery Network (CDN)

Slowness can be caused by not using a CDN, or by using one incorrectly.

A CDN replicates and stores (caches) files stored on an origin server (eg NetSuite) and makes them available around the world. The prime benefit of a CDN is that it makes server responses a lot faster as they are:

- More likely to be geographically closer to the user than the origin server (lower latency)

- Less likely to be as busy as the origin servers (load-balanced)

- Set up to store and serve copies of files and data that have already been generated (so there are significantly lower computational costs)

If everything is set up correctly and your site is still slow, check your CDN provider, because there could be issues with its network. For example, if a particular node is down, you or your users could be routed to a server further away than normal.

If you haven’t turned your CDN on, the problem is clear: everyone is trying to get resources from the NetSuite application itself. It’s like everyone rushing to use a single, central resource rather than many distributed ones, and this causes bottlenecks.

Cache misses cause a similar problem. A ‘cache miss’ happens when the CDN doesn’t have a copy of the resource the user has requested. The request is then sent directly to the application, and this can be time-consuming. If you’ve just cleared the cache, or added a number of new items or features to your site, cache misses will occur until the application has generated a copy of each resource and the copy has been stored on the CDN. Cache misses also happen when cached resources go out of date, because cached resources are only stored for a set period of time.
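To make the hit/miss behaviour concrete, here is a minimal Python sketch of an edge cache with a fixed time to live (TTL). It is a toy model built on our own assumptions, and the `EdgeCache` class and its parameters are hypothetical. It is not how Akamai or any other CDN is actually implemented. Three kinds of request go to the origin: the first request for a resource, a request after the cache is cleared, and a request after the TTL has passed.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Toy CDN edge node: serves stored copies until their TTL expires."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], str],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}  # path -> (expires_at, body)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> str:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            self.hits += 1  # fresh copy at the edge: fast path
            return entry[1]
        # Cache miss: never cached, or the copy has expired, so the
        # request has to be answered by the origin application (slow path).
        self.misses += 1
        body = self._fetch(path)
        self._store[path] = (now + self._ttl, body)
        return body

    def clear(self) -> None:
        """Like purging the CDN cache: the next request for anything misses."""
        self._store.clear()


if __name__ == "__main__":
    fake_now = [0.0]  # a controllable clock so the example is deterministic
    cache = EdgeCache(lambda path: f"<html>{path}</html>", ttl_seconds=300,
                      clock=lambda: fake_now[0])
    cache.get("/shop")   # miss: first request
    cache.get("/shop")   # hit
    fake_now[0] = 301.0  # TTL has passed
    cache.get("/shop")   # miss: copy expired
    cache.clear()
    cache.get("/shop")   # miss: cache was purged
    print(cache.hits, cache.misses)  # 1 3
```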

Finally, the slowness could be caused by DNS problems. For those, we have an article on Troubleshoot DNS Issues with the CDN.

Testing if the CDN is Working (And How to Enable It)

However, from a performance point of view, the most common issue our performance engineers see is that the CDN is not working at all, which means that we’re not providing the fastest response to visitors.

The first step is to check whether you’ve turned on the CDN. Yes, one of the most common problems is that developers forget to turn on the CDN after development has finished.

When you’re developing a site, it is common to deactivate caching because you are making frequent updates to the site and want to avoid old files being served (or having to constantly invalidate the cache). It’s easy, therefore, to forget to turn it on when the site goes live and begins trading.

See our documentation for information on how to Enable CDN Caching.

If it is enabled but still appears to be malfunctioning, you can test it with the command-line tools dig (Unix) or nslookup (Windows), like this:

```
# Unix
dig IN A www.example.com

# Windows
nslookup -class=IN -querytype=A www.example.com
```

There are also web services that can do this for you online.

When you run one of those commands, you should see your site’s domain resolve through a chain of CNAME records to the CDN provider, eg Akamai:

```
;QUESTION
webstore.example.com. IN A

;ANSWER
webstore.example.com. 14399 IN CNAME webstore.example.com.hosting.netsuite.com.
webstore.example.com.hosting.netsuite.com. 299 IN CNAME webstore.example.com.e87389.ctstdrv1234567.hosting.netsuite.com.edgekey.net.
webstore.example.com.e87389.ctstdrv1234567.hosting.netsuite.com.edgekey.net. 21599 IN CNAME e35026.x.akamaiedge.net.
e35026.x.akamaiedge.net. 19 IN A 2.22.31.144
```

If it’s not enabled, you’ll see the A record resolve straight to a NetSuite server IP address:

```
;QUESTION
webstore.example.com. IN A

;ANSWER
webstore.example.com. 299 IN A 212.25.248.17
```

If you are performing a test, make sure that you are looking up the correct domain for your web store. For example, example.com and www.example.com are completely different domains, and only one of them might be used for your web store.
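If you run this check regularly, you may want to automate it. The Python sketch below parses answer lines in the format shown above, for example the output of `dig +noall +answer www.example.com`. It reports whether the CNAME chain reaches an Akamai edge hostname. The suffix list is an assumption based on the example output. If your store resolves through different edge hostnames, adjust it.

```python
import re
from typing import List, Tuple

# Matches answer lines shaped like "<name> <ttl> IN <type> <data>".
# Section headers such as ";ANSWER" and question lines do not match and are skipped.
RECORD = re.compile(r"^(\S+)\s+(\d+)\s+IN\s+(A|AAAA|CNAME)\s+(\S+)\s*$")

# Assumption: edge hostname suffixes taken from the Akamai example above.
CDN_SUFFIXES = (".akamaiedge.net.", ".edgekey.net.")


def parse_answer(text: str) -> List[Tuple[str, str, str]]:
    """Returns (name, type, data) for each A/AAAA/CNAME record line."""
    records = []
    for line in text.splitlines():
        match = RECORD.match(line.strip())
        if match:
            name, _ttl, rtype, data = match.groups()
            records.append((name, rtype, data))
    return records


def served_by_cdn(text: str) -> bool:
    """True if any CNAME in the chain points at a CDN edge hostname."""
    return any(
        rtype == "CNAME" and data.endswith(CDN_SUFFIXES)
        for _name, rtype, data in parse_answer(text)
    )
```

An A record that resolves straight to an IP address, with no CNAME chain, makes `served_by_cdn` return `False`. That matches the "not enabled" case above.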

2. SEO Page Generator and Time To First Byte (TTFB)

Part of the architecture behind your site’s frontend is the SEO page generator. One of its primary uses is to generate static, fully rendered versions of your site’s pages so that they can be crawled by search engine bots, which might not be able to process JavaScript on the fly.

TTFB is a performance metric that measures the delta between a browser requesting content and starting to receive it. In simple terms, the web browser goes through the following process:

- Look up domains using DNS

- Make a TCP connection

- Negotiate security via TLS/SSL

- Send a request for content

- Wait for content (while timing TTFB)

- Begin receiving content

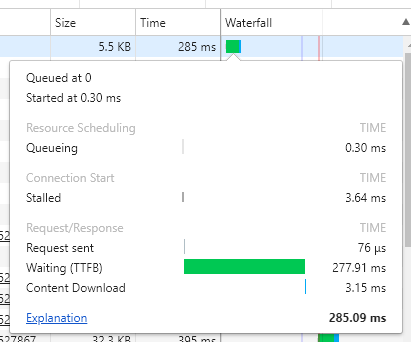
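Browsers expose the timestamps behind these phases through the W3C Navigation Timing API, for example via `performance.getEntriesByType("navigation")[0]` in the console. The following Python sketch assumes you have copied those values (in milliseconds) into a dictionary. It splits a page load into the phases above. It uses this article’s definition of TTFB: the time from sending the request to receiving the first byte. Some tools measure TTFB from the start of navigation instead, so their figure also includes DNS, connection and TLS time.

```python
from typing import Dict


def phase_durations(t: Dict[str, float]) -> Dict[str, float]:
    """Splits one navigation into DNS, TCP, TLS, TTFB and download times (ms).

    `t` holds Navigation Timing attributes such as domainLookupStart,
    connectStart, secureConnectionStart, requestStart and responseStart.
    """
    tls_start = t.get("secureConnectionStart", 0.0)
    uses_tls = tls_start > 0  # 0 means no TLS handshake took place
    return {
        "dns": t["domainLookupEnd"] - t["domainLookupStart"],
        # The connect phase includes TLS, so stop TCP where TLS begins.
        "tcp": (tls_start if uses_tls else t["connectEnd"]) - t["connectStart"],
        "tls": (t["connectEnd"] - tls_start) if uses_tls else 0.0,
        # TTFB as defined above: from sending the request to the first byte.
        "ttfb": t["responseStart"] - t["requestStart"],
        "download": t["responseEnd"] - t["responseStart"],
    }
```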

Your browser’s developer tools can be used to measure TTFB, and the vendors of those tools publish documentation on troubleshooting TTFB performance problems with them.

However, the page generator can be used as a tool to aid you in debugging. Using its debug and log mode, you can break down big requests for resources into smaller ones, potentially giving you greater detail than the browser tools can give you.

If you do this, keep an eye out for the following things (as they can impact TTFB):

- Sub-requests that take thousands of milliseconds rather than hundreds

- Any unusual status codes, in particular 301s and 302s (redirects), 404s (not found errors), and 500s, 502s and 503s (server errors)

- Unnecessary service calls

If any of these are present, they can give you a clue as to where to look for performance problems.
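Once you have the sub-request timings from the debug log, a quick filter can surface the warning signs listed above. This Python sketch rests on two assumptions. First, `SubRequest` is a hypothetical record shape; we don’t reproduce the page generator’s log format here, so you would map its fields onto this shape yourself. Second, treating a repeated URL as a possibly unnecessary service call is just one heuristic.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SubRequest:
    url: str
    status: int
    duration_ms: float


SLOW_MS = 1000  # thousands of milliseconds rather than hundreds
SUSPICIOUS_STATUS = {301, 302, 404, 500, 502, 503}


def flag(requests: List[SubRequest]) -> List[str]:
    """Returns human-readable findings, in the order the requests were made."""
    findings = []
    seen = set()
    for r in requests:
        if r.duration_ms >= SLOW_MS:
            findings.append(f"slow ({r.duration_ms:.0f} ms): {r.url}")
        if r.status in SUSPICIOUS_STATUS:
            findings.append(f"status {r.status}: {r.url}")
        if r.url in seen:  # heuristic for an unnecessary, duplicated call
            findings.append(f"repeated call: {r.url}")
        seen.add(r.url)
    return findings
```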

3. Image Optimization

We won’t go into much detail here because this is already covered in great detail in the Help Center; see Manage and Maintain Images.

The short version is that when it comes to total page weight, images are quite often the single biggest contributor. There are plenty of ways to optimize images. These include increasing their compression to reduce each image’s size, and reducing the number of images (and therefore HTTP requests) on a page.
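To check how much of a page’s weight comes from images, you can export the page’s network activity as a HAR file from your browser’s developer tools and total it up. Here is a minimal Python sketch, assuming a HAR 1.2 export. It sums `response.content.size`, which is the decoded body size, so compressed transfer sizes will be smaller.

```python
from typing import Any, Dict


def image_weight_share(har: Dict[str, Any]) -> float:
    """Fraction of response bytes that are images in a HAR 1.2 export."""
    total = 0
    images = 0
    for entry in har["log"]["entries"]:
        content = entry["response"]["content"]
        size = max(content.get("size", 0), 0)  # guard against -1 or missing sizes
        total += size
        if content.get("mimeType", "").startswith("image/"):
            images += size
    return images / total if total else 0.0
```

For example, if `image_weight_share(json.load(open("store.har")))` (where `store.har` is a hypothetical export) returned 0.75, images would make up three quarters of the page’s bytes.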

4. Page Rendering

Page rendering performance refers to a different kind of performance: perceived performance. Improving a page’s perceived performance means giving the user the impression that the page is loaded and ready to use. Often, the perception of good performance is just as important as the actual performance.

There are a number of tools that can help test for perceived performance. They work by taking screenshots at set intervals (eg every 100ms) to build up a film strip that you can step through to see how long it takes for a ‘meaningful’ paint to occur. You can try this in your web browser’s developer tools, or with a service such as WebPageTest.

Common things that cause perceived performance problems:

- Custom fonts

- Slow, blocking (synchronous) requests

- Calls to third-party scripts

It’s not uncommon for sites to implement many tracking tags (eg for analytics or ads). We advise caution when doing so: they can have a massive impact on performance, particularly if they have not been implemented properly. We recommend using Google Tag Manager wherever possible, as it offers better performance than implementing each tag individually.

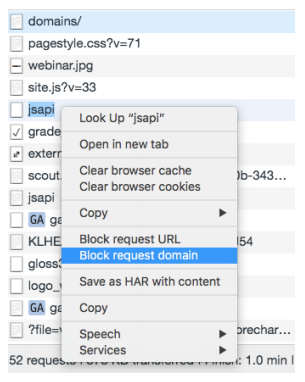

If you want to test their impact, you can use your browser’s developer tools to block requests to specific domains. For example, in Chrome, you can right-click on a particular request in the Network tab and choose Block request domain — after a refresh, all attempts to reach that domain will be automatically prevented. If your site loads particularly quickly after this, you may have found a culprit.
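Blocking domains one at a time works well once you have a suspect. To find suspects, you can export the page’s requests as a HAR file from your browser’s developer tools, group them by host, and rank the third-party hosts. The Python sketch below treats `first_party` and its subdomains as your own hosts, which is an assumption you may need to adjust. Summed request times overlap when requests run in parallel, so treat the totals as a ranking rather than as the load time each host adds.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple
from urllib.parse import urlsplit


def third_party_cost(har: Dict[str, Any], first_party: str) -> List[Tuple[str, int, float]]:
    """Returns (host, request_count, total_time_ms), slowest hosts first.

    `first_party` is your store's domain, eg "example.com"; requests to it
    and its subdomains are skipped.
    """
    counts: Dict[str, int] = defaultdict(int)
    times: Dict[str, float] = defaultdict(float)
    for entry in har["log"]["entries"]:
        host = urlsplit(entry["request"]["url"]).hostname or ""
        if host == first_party or host.endswith("." + first_party):
            continue
        counts[host] += 1
        times[host] += entry.get("time", 0.0)  # HAR: total elapsed ms for the request
    rows = [(host, counts[host], times[host]) for host in counts]
    return sorted(rows, key=lambda row: row[2], reverse=True)
```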

5. Item Search API

Almost all item data you see on your site is requested through the items search API. When a query is made, our search service goes through your site’s index and finds out which items should be returned, then it performs a database query to return those items. Accordingly, there will be a performance cost as it is processed and the data returned.

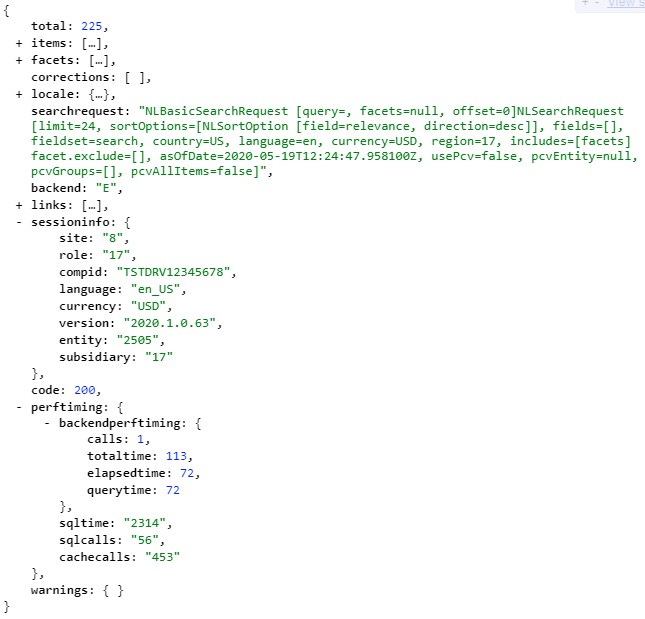

If you suspect that there could be performance problems with it, you can get the request URL (eg in the Network tab in your browser’s developer tools) and then attach ssdebug=T as a parameter to the end of the URL. At the bottom of the results will be some additional JSON data such as searchrequest, which gives an abstraction of the request it processed, sessioninfo, which gives you information about your site, and, most importantly, perftiming, which gives you details about how quickly the response was generated.

If you have made the call before, add a parameter with a unique value to the end of the URL to force a cache miss. Repeated calls for exactly the same query return the cached version, so adding a parameter with a new, random value returns the same results, but generated by the application.
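Adding these parameters by hand is easy to get wrong when the URL already has a query string, so here is a small Python helper. The `nocache` parameter name is hypothetical. As described above, any parameter with a new, random value should produce a fresh response. The helper leaves the original query string exactly as it was.

```python
import uuid
from urllib.parse import urlencode, urlsplit, urlunsplit


def debug_url(request_url: str, bust_cache: bool = True) -> str:
    """Appends ssdebug=T and, optionally, a throwaway parameter with a random value."""
    parts = urlsplit(request_url)
    extra = [("ssdebug", "T")]
    if bust_cache:
        extra.append(("nocache", uuid.uuid4().hex))  # new value on every call
    query = parts.query + ("&" if parts.query else "") + urlencode(extra)
    return urlunsplit(parts._replace(query=query))
```

For example, with the hypothetical URL `https://www.example.com/api/items?q=shoes`, `debug_url(url, bust_cache=False)` returns `https://www.example.com/api/items?q=shoes&ssdebug=T`.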

In the screenshot above, you’ll note that the perftiming:sqltiming value is 2314, which means the SQL query on the database took 2.314 seconds to complete. It’s hard to put an exact number on what a ‘concerning’ value is. However, if it is significantly higher than this and is causing noticeable performance problems, you will need to rethink the queries you are submitting to the search service.
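If you compare several runs, it helps to read the value programmatically. This Python sketch assumes the debug data is a top-level `perftiming` object whose `sqltiming` value is in milliseconds, which is how the 2314 value above reads as 2.314 seconds. The exact JSON layout is an assumption here, so check it against your own response.

```python
import json
from typing import Any, Dict


def sql_seconds(response_body: str) -> float:
    """Returns perftiming.sqltiming converted from milliseconds to seconds.

    Assumes {"perftiming": {"sqltiming": <ms>, ...}} at the top level.
    """
    data: Dict[str, Any] = json.loads(response_body)
    return data["perftiming"]["sqltiming"] / 1000
```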

If your searches are taking particularly long, the most common cause is that you are requesting too much data back. Every field of data you want in your response comes with a cost, and so we always suggest that you keep your fields and field sets to the minimum required. For more information on this, see Field Set and Facet Field Performance.

If you think your field sets are lean, consider where you are using them. For example, some developers reuse field sets because they are ‘close enough’ to what they want, rather than creating a second one for their particular use case. Unfortunately, this can slow the second page down unnecessarily. The field set may perform well enough in its original place, but on the second page its cost adds to whatever other performance problems that page already has.

Consider also how you are using them. A classic example is stock info: you want to show whether an item is in stock. The isinstock field is quite lightweight and is set up to return a boolean value. However, we have seen cases where developers have instead returned all the stock information about the item and then performed complex logic on that data to generate their own boolean value. Don’t bother! Use the system-generated version!

Another area where we frequently encounter problems is product list pages. The default field set for search is set up to cover a number of bases, and each developer can trim it down for their own site. But sometimes we see sites go in the opposite direction and add extra fields for no discernible reason. Keep in mind that on search results and commerce category pages you are asking the API for a lot of data for each item, and the more items per page, the greater the performance cost.

Consider the following GIF, which takes a look at a hypothetical example of the fields returned per item, and then considers whether they are truly necessary.
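The same review can be done as a quick calculation. This Python sketch compares a field set against the fields a template actually reads. It also shows how the number of requested values scales with the number of items per page. The field names and page size are hypothetical examples, not a recommended field set.

```python
from typing import Iterable, List


def unused_fields(field_set: Iterable[str], used_by_template: Iterable[str]) -> List[str]:
    """Fields the API returns that the page never reads (candidates to drop)."""
    used = set(used_by_template)
    return sorted(f for f in field_set if f not in used)


def values_requested(items_per_page: int, field_count: int) -> int:
    """Every field in the field set is returned for every item on the page."""
    return items_per_page * field_count


if __name__ == "__main__":
    # Hypothetical field names, for illustration only.
    field_set = ["name", "price", "thumbnail", "isinstock",
                 "long_description", "stock_by_location", "custom_badge", "related_items"]
    template_reads = ["name", "price", "thumbnail", "isinstock"]
    print(unused_fields(field_set, template_reads))
    # ['custom_badge', 'long_description', 'related_items', 'stock_by_location']
    print(values_requested(24, len(field_set)), "vs", values_requested(24, len(template_reads)))
    # 192 vs 96
```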

6. Environment SSP Files

This only applies to SuiteCommerce Advanced sites. In this edition, developers can make modifications to the core files that run as soon as the applications start. They’re called shopping.user.environment.ssp and shopping.environment.ssp, and they are used to get important data and make them available to the rest of the application.

Importantly, the user environment file is designed for user-specific data and is not cached, whereas the generic environment file is.

We have seen some cheeky developers who have decided to use these files rather lazily and ‘dump’ code in them because they needed it immediately at application start. While it can sometimes be legitimate to use these files to bootstrap data, we recommend it only in rare cases (see Bootstrap Important Data at Application Load). These files can also be responsible for slowness, even when used correctly.

Some tips on using these files correctly follow:

shopping.user.environment.ssp:

- Remove non-user-specific information

- Check if there’s any unnecessary ‘keep fresh’ code (ie data that has been placed here because it is never cached)

- As this is a global file, you’ll probably notice the slowness across the entire web store (and that’s OK)

shopping.environment.ssp:

- Check for unnecessary bootstrapped data (ie, is it required — and is it required across the entire site?)

- Consider replacing the bootstrapped data with a service

- As this is where the site’s categories, CMS and configuration data is called, check to see if any of these are the culprits
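One way to work through these tips is to list what each file bootstraps and check each entry against the rules above. User-specific data belongs in the uncached user file and shared data in the cached file. Data that isn’t needed site-wide may be better served by a service. The Python sketch below encodes those checks. The inventory you feed it is hypothetical: you would compile it yourself by reading the two files.

```python
from dataclasses import dataclass
from typing import List

USER_ENV = "shopping.user.environment.ssp"  # user-specific, not cached
SHARED_ENV = "shopping.environment.ssp"     # shared, cached


@dataclass
class Bootstrapped:
    key: str                # hypothetical name for the bootstrapped value
    file: str               # which environment file loads it
    user_specific: bool     # does it differ per shopper?
    needed_site_wide: bool  # is it needed on every page?


def review(values: List[Bootstrapped]) -> List[str]:
    notes = []
    for v in values:
        if v.file == USER_ENV and not v.user_specific:
            notes.append(f"{v.key}: not user-specific, yet fetched uncached on every request")
        if v.file == SHARED_ENV and v.user_specific:
            notes.append(f"{v.key}: user-specific data in a cached file")
        if v.file == SHARED_ENV and not v.needed_site_wide:
            notes.append(f"{v.key}: not needed site-wide; consider loading it through a service")
    return notes
```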

7. Commerce Categories

Category data can be one of the biggest culprits: it can be quite intensive to generate, so you should approach its use with performance in mind.

General tips for these include:

- Use commerce categories — don’t try to shoehorn in the old, Site Builder categories functionality

- Don’t go deeper than three levels in your taxonomy

- Try to assign a product to only one category

Some people treat categories like keywords or tags: ie, ‘more is better’. Ideally, you want to do the opposite: categories are there to be neatly organized and easy to navigate through. If people keep seeing the same products again and again as they browse different categories, they will get frustrated and leave. Remember, categories are meant to segment your catalog; repetition undermines that.
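Both structural tips above can be checked mechanically. This Python sketch assumes two hypothetical data shapes: your category tree exported as nested dictionaries, and your product assignments as a mapping from item to category names. It flags taxonomies deeper than three levels and items assigned to more than one category.

```python
from typing import Dict, List

MAX_LEVELS = 3  # "Don't go deeper than three levels in your taxonomy"

Tree = Dict[str, dict]  # category name -> its subcategories (same shape)


def max_depth(tree: Tree) -> int:
    """Number of levels in the deepest branch of the category tree."""
    if not tree:
        return 0
    return 1 + max(max_depth(children) for children in tree.values())


def category_warnings(tree: Tree, assignments: Dict[str, List[str]]) -> List[str]:
    warnings = []
    depth = max_depth(tree)
    if depth > MAX_LEVELS:
        warnings.append(f"taxonomy is {depth} levels deep (aim for {MAX_LEVELS} or fewer)")
    for item, categories in sorted(assignments.items()):
        distinct = len(set(categories))
        if distinct > 1:
            warnings.append(f"{item} is in {distinct} categories")
    return warnings
```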

8. Scriptable Cart

Performance and best practices are mentioned in our documentation, so we will keep this short.

Scriptable cart scripts run on both web store and back office sales transactions, and are frequently used to perform additional actions and connect to third-party services. These actions are often blocking, which means that the application (and therefore the user) has to wait for them to complete before the operation can continue.

Where you see performance problems depends on what your script is set up to do. For example, if your scriptable cart listens for changes to order line items, or for new ones being added, you’ll likely find that adding items to the cart is slow. We’ve also seen a number of performance issues in the checkout wizard caused by scriptable cart.

A common cause of slowness is a script running on the web store when it only needs to run in the back office. To investigate, go to Customization > Scripting > Scripted Records > Sales Order and look at all the scripts that run on it.

After picking a suspect, you can go to its deployment record and toggle the All Roles and All Employees checkboxes: if you check All Roles and uncheck All Employees, it’ll run in the web store; if you uncheck All Roles and check All Employees, it’ll run only in the backend. Note, though, that this is a bit hacky and isn’t a long-term solution: you need to figure out why the script is taking so long!
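Because these scripts block, every unnecessary web store deployment adds to the shopper’s wait. The Python sketch below assumes you have compiled a hypothetical inventory from the Scripted Records page. For each script, the inventory notes whether it currently runs in the web store, whether it actually needs to, and a measured average run time. The sketch lists the candidates and estimates the blocking time they add, assuming they all run on the same operation.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CartScript:
    name: str
    runs_in_webstore: bool     # as currently deployed
    needed_in_webstore: bool   # does the web store actually need it?
    avg_ms: float              # average run time you measured


def avoidable_blocking(scripts: List[CartScript]) -> Tuple[List[str], float]:
    """Scripts deployed to the web store without needing it, and their summed time.

    The sum assumes the scripts block one after another on the same operation.
    """
    suspects = [s for s in scripts if s.runs_in_webstore and not s.needed_in_webstore]
    return [s.name for s in suspects], sum(s.avg_ms for s in suspects)
```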

9. User Event Scripts

If there are issues with how long it takes to place an order then you may need to examine your user event scripts. There’s an option called Execute in Commerce Context on a script’s deployment record and it determines whether it will run on web stores — if you toggle this off and things improve, then this is likely where your problems lie.

There’s also a feature you can enable called Asynchronous afterSubmit Sales Order Processing that may help if your code listens for the afterSubmit event. When this feature is enabled, afterSubmit user events are triggered asynchronously when a sales order is created during web store checkout, meaning that they run in the background.

When an afterSubmit user event runs in asynchronous mode, the next page loads while the afterSubmit user event is still processing. So if your afterSubmit scripts are slowing down checkout, you may find some joy in switching to asynchronous event handling.
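The difference between the two modes can be illustrated with a small Python analogy. It is not NetSuite’s implementation: `place_order` and `after_submit` are hypothetical stand-ins, and a thread pool plays the role of background processing. In synchronous mode, the shopper waits for the afterSubmit work to finish. In asynchronous mode, the next page can load straight away while the afterSubmit work continues in the background.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Optional, Tuple

# Stand-in for the platform's background processing (an analogy only).
_background = ThreadPoolExecutor(max_workers=2)


def place_order(after_submit: Callable[[], None],
                asynchronous: bool) -> Tuple[float, Optional[Future]]:
    """Returns (seconds the shopper waits, handle to any background work)."""
    start = time.perf_counter()
    # ... saving the sales order itself would happen here ...
    pending: Optional[Future] = None
    if asynchronous:
        pending = _background.submit(after_submit)  # next page can load now
    else:
        after_submit()  # the shopper waits until afterSubmit finishes
    return time.perf_counter() - start, pending


if __name__ == "__main__":
    def slow_after_submit() -> None:
        time.sleep(0.5)  # eg a slow call to a third-party service

    waited, _ = place_order(slow_after_submit, asynchronous=False)
    print(f"synchronous: shopper waited {waited:.2f}s")
    waited, pending = place_order(slow_after_submit, asynchronous=True)
    print(f"asynchronous: shopper waited {waited:.2f}s")
    pending.result()  # the afterSubmit work still completes in the background
```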

Code samples are licensed under the Universal Permissive License (UPL), and may rely on Third-Party Licenses