What Google's Core Web Vitals Update Means for Ecommerce SEO

In June 2021, Google will be changing their ranking algorithm to include page experience signals as ranking factors. Page experience signals include things like first-load performance, mobile-friendliness, security and safety. In short, Google wants people who use its search engine to visit pages that not only deliver the content that is relevant to their search but also provide a good user experience. Thus, when ranking pages that it considers equally relevant to a user’s search, it will prefer the one that gives a better user experience.

The point of this blog post is not to parrot what has already been said in Google’s documentation. Rather, we will point you to it so that you can read it yourself, and then analyze it through the lens of ecommerce to see what it could mean for web store SEO.

Accordingly, we’ll look at:

- What’s going on with Google’s new algorithm change

- What tools, data and information Google is offering

- What the perspective is from the ecommerce industry as a whole

- What considerations and criticisms you should take into account when evaluating Google’s metrics and testing tools

Before we begin, we should preface this post by saying that we are talking about a third-party service and so we can only speculate on exactly what will be happening ‘behind the scenes’. Accordingly, the analysis that we provide in this post is based on what information is publicly available and our industry experience and expertise. If you have strong concerns, you are encouraged to seek additional information and professional advice.

Finally, if your web store does not depend on SEO / organic search traffic (for example, because your site’s inventory is behind a login wall), then this content will be largely irrelevant for you.

Timeline of Events

There are many resources available on the internet regarding the timeline of this change, but we recommend going straight to the source materials that Google have provided. We won’t delve too deeply into them here, and you’re encouraged to read them yourself.

In May 2020, Google wrote a blog post titled Evaluating Page Experience For a Better Web where they made the first announcement about the algorithm change. They mention previous announcements related to the change, such as the introduction of web vitals, and how industry research supports the position that users want sites that offer a great user experience.

Following up in November 2020, Google published another blog post laying out the timing of the upcoming changes, initially setting the rollout for May 2021.

Shortly after, in December 2020, they published an FAQ covering some key questions that came up within the community. A follow-up post was published in March 2021 with more questions.

Most recently, in April 2021, Google pushed the rollout back to June 2021. What’s important about this latest post is that it seeks to assuage some doubts and fears about the change, which is also part of what this post aims to do.

Context

While learning about this change, it is important to put it into context — not only in terms of other changes like it, but also Google in general. For example, it might be worthwhile to look into how search algorithms work and Google’s algorithm update history.

With nearly 90% of searches taking place through Google, there is no doubt about how dominant a player Google is in the search marketplace. For example, no other search service can claim that its brand name has become the generic term for searching for content.

This high level of trust that users place in Google for finding content has led to a sense that if a webpage is not in Google, then it does not exist on the internet. For web store owners, this cuts the other way: if people can’t find your site on Google, you risk missing out on potential visitors. Accordingly, optimizing your webpages for search engines is massively important, and it has created an entire industry of dedicated professionals and specialized organizations.

So, when Google say that they are introducing or prioritizing new ranking factors, it is in the interest of web stores to check how optimized they are for these factors and make any necessary improvements.

However, it is also important to keep in mind that there is always a significant amount of nuance and depth to changes like these. Principally, not all ranking signals are weighted equally, and there are a lot of them: according to SEO experts Backlinko, Google uses over 200 ranking factors.

In short, it is good to pay attention to these changes and take them into account, but your response should be proportional to the scale of the change.

Page Experience as Ranking Factors

Generally speaking, how good an experience a user has on your website is subjective. However, if we know, for example, that users are more likely to abandon a site when pages take a long time to load, we can reasonably assume that the reverse also tends to hold: faster sites are more likely to retain visitors. Therefore, one way to gauge whether a user is having a good experience is to measure a number of performance metrics when they visit.

Performance, however, is not the only thing Google is looking for. Another area it is interested in is mobile-friendliness: whether your site fits onto a small-screened device, and whether things like font sizes are appropriate for it. Google is also interested in less abstract things, such as whether your site is served over HTTPS using a certificate from a recognized issuer.

The metrics and checks fall into three categories:

- First-load page performance

- Mobile-friendliness

- Security and safety

Performance

If you’ve measured the performance of your site before, you’ll already be aware of some existing metrics, such as time to first byte (TTFB). Google has introduced its own metrics, centered around the user’s experience. There are seven that it calls ‘web vitals’: metrics that measure loading, interactivity and visual stability.

We won’t go into significant detail about these here, as they are covered extensively in Google’s documentation.

| Metric | Description | Core Web Vital |
|---|---|---|
| First Contentful Paint (FCP) | When the first content (text or images) has rendered; typically shortly after TTFB | No |
| Largest Contentful Paint (LCP) | When the largest block of text or image visible in the viewport has rendered | Yes |
| Speed Index | How quickly the visible parts of the page were populated during loading | No |
| Time to Interactive (TTI) | How long it took for the page to become reliably ready to use | No |
| Total Blocking Time (TBT) | The sum of the periods between FCP and TTI when long tasks blocked the page from responding to input | No |
| Cumulative Layout Shift (CLS) | How much the visible parts of the page moved as the page rendered | Yes |
| First Input Delay (FID) | How long the browser took to start responding after the user’s first interaction (such as a tap or click) | Yes |
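The TBT definition is the least intuitive of these, so here is a minimal Python sketch of how it is commonly calculated: any main-thread task longer than 50 ms counts as a ‘long task’, and only the time beyond those first 50 ms counts as blocking. The task timings are hypothetical, and the handling of tasks that straddle FCP or TTI is simplified; Lighthouse works from a real browser trace and may treat such edge cases differently.

```python
from dataclasses import dataclass

# A main-thread task longer than 50 ms is a "long task"; only the excess counts as blocking.
BLOCKING_THRESHOLD_MS = 50.0


@dataclass
class Task:
    start_ms: float     # when the task started, relative to navigation start
    duration_ms: float  # how long the task occupied the main thread


def total_blocking_time(tasks, fcp_ms, tti_ms):
    """Sum the blocking portion of each long task between FCP and TTI (simplified)."""
    total = 0.0
    for task in tasks:
        # Clip the task to the FCP..TTI window; tasks entirely outside it go negative.
        start = max(task.start_ms, fcp_ms)
        end = min(task.start_ms + task.duration_ms, tti_ms)
        clipped = end - start
        if clipped > BLOCKING_THRESHOLD_MS:
            total += clipped - BLOCKING_THRESHOLD_MS
    return total


# Hypothetical trace with FCP at 1,000 ms and TTI at 3,000 ms.
tasks = [
    Task(start_ms=500, duration_ms=300),   # finishes before FCP: ignored
    Task(start_ms=1200, duration_ms=40),   # under 50 ms: not a long task
    Task(start_ms=1500, duration_ms=250),  # contributes 200 ms
    Task(start_ms=2200, duration_ms=120),  # contributes 70 ms
]
print(total_blocking_time(tasks, fcp_ms=1000, tti_ms=3000))  # 270.0
```

Note that a page can have plenty of short tasks and still score a TBT of zero; it is the few long ones that count.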

Out of these seven, there are three ‘core’ web vitals (marked ‘Yes’ in the table above): the metrics Google considers most crucial for ensuring a good user experience. It is these three metrics that Google will be measuring when people visit your site and using to influence its ranking decisions.

After conducting research on these vitals, Google set benchmarks stratifying each into three levels: good, needs improvement, and poor. It is sites that get ‘good’ scores that will receive a boost in ranking.
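For reference, the thresholds Google has published are: LCP is good at or under 2.5 s and poor above 4 s; FID is good at or under 100 ms and poor above 300 ms; CLS is good at or under 0.1 and poor above 0.25. Google applies them to the 75th percentile of real page loads, so, roughly speaking, a page only rates ‘good’ if about three out of four visits are good. The sketch below encodes this; the sample values are hypothetical, and nearest-rank is just one simple way of computing a percentile.

```python
import math

# Google's published Core Web Vitals thresholds: (good upper bound, poor lower bound).
# LCP and FID are in milliseconds; CLS is a unitless score.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "FID": (100, 300),
    "CLS": (0.1, 0.25),
}


def rate(metric, value):
    """Bucket a single value as 'good', 'needs improvement' or 'poor'."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


def p75(samples):
    """75th percentile using the nearest-rank method (one of several valid methods)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def assess(metric, samples):
    """Rate a page's real-user data by its 75th-percentile value."""
    return rate(metric, p75(samples))


# Hypothetical LCP samples (ms) from eight real visits.
lcp_samples = [1800, 2100, 2300, 2400, 2450, 2600, 3900, 5200]
print(p75(lcp_samples), assess("LCP", lcp_samples))  # 2600 needs improvement
```

Here most visits are fast, but the slowest quarter pulls the 75th percentile just past 2.5 s.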

Thus, we can abstract three priorities out of this:

- Get the largest part of the page to render as quickly as possible

- Ensure that the layout of the page is fixed, and doesn’t move about

- Cut down the time it takes for the page to be ready to respond to user input
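To make the layout priority more concrete: Google scores each unexpected layout shift as its impact fraction (how much of the viewport the moving element covers, before and after the move) multiplied by its distance fraction (how far it moved, relative to the viewport’s largest dimension). The sketch below handles only the simplest case, a full-width element moving straight down, for example because an image without reserved dimensions loaded above it. The viewport and element sizes are hypothetical.

```python
def layout_shift_score(viewport_w, viewport_h, elem_top, elem_height, shift_down):
    """Score one downward shift of a full-width element (simplified model).

    score = impact fraction * distance fraction, where
    - impact fraction: share of the viewport covered by the element's old and new positions
    - distance fraction: distance moved / the viewport's largest dimension
    """

    def clip(top, bottom):
        # Height of the band [top, bottom] that is inside the viewport.
        return max(0.0, min(bottom, viewport_h) - max(top, 0.0))

    old_top, old_bottom = elem_top, elem_top + elem_height
    new_top, new_bottom = old_top + shift_down, old_bottom + shift_down
    if new_top <= old_bottom:
        # Old and new positions overlap, so their union is one continuous band.
        union_h = clip(old_top, new_bottom)
    else:
        union_h = clip(old_top, old_bottom) + clip(new_top, new_bottom)
    impact_fraction = (union_h * viewport_w) / (viewport_w * viewport_h)
    distance_fraction = shift_down / max(viewport_w, viewport_h)
    return impact_fraction * distance_fraction


# A block covering the top half of a 400x800 viewport is pushed down by 200 px.
print(layout_shift_score(400, 800, 0, 400, 200))  # 0.1875
```

On its own, that single shift already exceeds the 0.1 ‘good’ CLS threshold, which is why reserving space for late-loading content matters so much.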

Mobile-Friendliness

Mobile-friendliness is something that Google has been promoting for a while. For example, in November 2016, Google announced a switch to mobile-first indexing, reflecting its observation that the majority of its users were now searching on mobile devices.

There are many aspects to mobile-friendliness, including:

- Having a responsive or dedicated mobile site

- Using mobile-specific HTML and CSS

- Ensuring the content fits on the screen

- Ensuring appropriate sizing is used, for example for text

- Ensuring clickable elements are sufficiently spaced out
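Several of these points depend on the viewport meta tag: without something like `<meta name="viewport" content="width=device-width, initial-scale=1">`, mobile browsers typically lay a page out at a desktop width and shrink it to fit. Here is a minimal heuristic check using Python’s standard-library HTML parser; it only inspects the markup you pass in and is not a substitute for Google’s Mobile-Friendly Test.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Collects the content of any <meta name="viewport"> tags in a page."""

    def __init__(self):
        super().__init__()
        self.viewports = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewports.append(attributes.get("content") or "")


def has_responsive_viewport(html):
    """True if the page declares a viewport that tracks the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    return any("width=device-width" in v.replace(" ", "").lower() for v in finder.viewports)


print(has_responsive_viewport('<meta name="viewport" content="width=device-width, initial-scale=1">'))  # True
print(has_responsive_viewport('<meta name="viewport" content="width=1024">'))  # False
```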

Security and Safety

There are many things a website can do to ensure safety and security, but perhaps the four that matter most to Google are:

- Encrypting the entire site with HTTPS

- Using a trusted domain and security certificate provider

- Putting no malicious or deceptive code in your JavaScript

- Showing no intrusive interstitials

When it comes to your web store, these are all quite straightforward to achieve and should be available out of the box.

Testing a Webpage

In order to test many of these factors, Google has made a suite of tools available.

The main testing tool is Lighthouse, which is developer-focused and includes all of the lab-measurable performance metrics mentioned above, as well as a number of other tests for mobile-friendliness and best practices.

However, more user-friendly versions are available through a number of UIs:

- While in Google Chrome, via Developer Tools > Lighthouse

- PageSpeed Insights https://developers.google.com/speed/pagespeed/insights/

- Web.dev https://web.dev/measure/

Additional performance information is also available in Google Search Console, via Experience > Page Experience.

Finally, Google also offers:

- Mobile-Friendly Test: https://search.google.com/test/mobile-friendly

- Structured Data Testing Tool: https://search.google.com/structured-data/testing-tool

- PageSpeed Insights API: https://developers.google.com/speed/docs/insights/v5/get-started

PageSpeed Insights and Lighthouse

As most of the attention is on performance, most people are using Lighthouse or tools that wrap it, such as PageSpeed Insights (PSI).

The Lighthouse package was developed by Google and is open source. Many development teams have started to work Lighthouse testing into their test suites, and businesses have sprung up that package it into subscription plans, for example by offering repeated or scheduled testing, data persistence, and analysis.

It is quite easy to get started with, and it can be built into larger testing setups using tools like Node and Gulp. For example, you can have it run headlessly against a number of different URLs in the background and export the results as JSON, so they can be imported into a spreadsheet or analysis software.
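As an illustration of that last step, here is a minimal Python sketch (rather than the Node or Gulp tooling mentioned above) that turns a batch of Lighthouse JSON reports into a single CSV file. It assumes each report was produced with the Lighthouse CLI, for example `lighthouse <url> --output=json --output-path=report.json --chrome-flags="--headless"`, and it reads the audit IDs and `numericValue` fields found in recent Lighthouse reports; check these names against the version you run.

```python
import csv
import io
import json

# Lighthouse audit IDs for the lab metrics in the table above.
METRIC_AUDITS = [
    "first-contentful-paint",
    "largest-contentful-paint",
    "speed-index",
    "interactive",
    "total-blocking-time",
    "cumulative-layout-shift",
]


def report_to_row(report):
    """Flatten one Lighthouse JSON report into a spreadsheet-friendly row."""
    row = {
        "url": report.get("requestedUrl", ""),
        # Lighthouse stores category scores from 0 to 1; PSI displays them out of 100.
        "performance_score": round(report["categories"]["performance"]["score"] * 100),
    }
    for audit_id in METRIC_AUDITS:
        row[audit_id] = report["audits"][audit_id]["numericValue"]
    return row


def reports_to_csv(json_texts):
    """Convert several Lighthouse JSON reports (as strings) into one CSV document."""
    rows = [report_to_row(json.loads(text)) for text in json_texts]
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["url", "performance_score"] + METRIC_AUDITS)
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


# Usage, assuming one report per tested URL in a hypothetical ./reports/ folder:
# from pathlib import Path
# csv_text = reports_to_csv(p.read_text() for p in Path("reports").glob("*.json"))
```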

However, for a lot of people, that level of setup is unnecessary — especially if you are new. In most cases, using PSI is the preferred choice because it’s already all set up for you to use:

- It is easy to use for both developers and non-technical folk

- It will include real user data if it is available

- It runs from Google’s servers, so there is an assurance of stability and reliability

Going forward, we’ll focus more on PSI than Lighthouse, but will often use the names interchangeably as they refer fundamentally to the same thing.

How Does It Work?

If you wish, you can try testing a webpage now: it can be any publicly accessible page, not just one on a website that you operate.

After you enter the URL of the webpage you want to test, Lighthouse spins up an instance of Chrome and visits that page, simulating a real user. During that time, it measures how long things take to load using all of the criteria above except first input delay (as FID can only be measured with real users).

Once it has its metrics, it will perform a number of other tests (such as mobile-friendliness and code quality checks). After all the tests are complete, it will compare timings and metrics against its benchmarks and then produce scores based on them. A high-level general score is also computed, based on the weightings it gives to each one.
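As a sketch of that last step: the overall performance score is a weighted average of the individual metric scores. The weights below are the ones Lighthouse 6 and 7 used (LCP and TBT 25% each; FCP, Speed Index and TTI 15% each; CLS 5%); later versions rebalance them. The per-metric scores are hypothetical; converting raw timings into those 0 to 1 scores uses scoring curves that are not reproduced here.

```python
# Metric weights used by the Lighthouse 6/7 performance score (they change between versions).
WEIGHTS = {
    "first-contentful-paint": 0.15,
    "speed-index": 0.15,
    "largest-contentful-paint": 0.25,
    "interactive": 0.15,
    "total-blocking-time": 0.25,
    "cumulative-layout-shift": 0.05,
}


def performance_score(metric_scores):
    """Combine per-metric scores (0 to 1) into the 0-100 headline score."""
    weighted = sum(WEIGHTS[m] * metric_scores[m] for m in WEIGHTS)
    return round(weighted * 100)


# Hypothetical per-metric scores for a JavaScript-heavy product page.
scores = {
    "first-contentful-paint": 0.9,
    "speed-index": 0.8,
    "largest-contentful-paint": 0.3,
    "interactive": 0.4,
    "total-blocking-time": 0.2,
    "cumulative-layout-shift": 1.0,
}
print(performance_score(scores))  # 49
```

With these inputs, good FCP and CLS scores cannot make up for weak LCP and TBT, which together carry half of the weight.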

There are some options you can specify when using Lighthouse/PSI and the crucial one is ensuring that you select a mobile test.

Why mobile? Well, as Google is now doing mobile-first indexing, it is important that you use benchmarks for this form factor.

What difference does this make? Testing in ‘desktop’ mode applies little or no throttling, whereas specifying ‘mobile’ mode applies stricter constraints to the testing process:

- Use a small viewport

- Artificially throttle the network conditions to simulate a slow 4G connection by reducing bandwidth and increasing latency

- Artificially throttle computational performance by limiting the CPU to one quarter of its processing power

Naturally, running tests in slower, more constrained conditions produces harsher results. Computationally expensive rendering, such as that required by JavaScript-heavy websites, takes significantly longer on slower CPUs. Likewise, pages that have big payloads or make a lot of HTTP requests will be held back by slower network conditions.
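A back-of-the-envelope model shows why these constraints hit heavy pages so hard. The sketch assumes settings close to those Lighthouse documents for its default simulated mobile test (about 150 ms round-trip time, about 1.6 Mbps download and a 4x CPU slowdown) and compares them with a hypothetical fast connection (40 ms, 10 Mbps, no CPU slowdown). The 2 MB page, 10 sequential round trips and 0.5 s of main-thread work are also hypothetical, and the model ignores parallel requests, TCP slow start, compression and caching, so treat the numbers as illustrative only.

```python
def estimated_load_s(page_bytes, round_trips, main_thread_s, rtt_ms, bandwidth_mbps, cpu_slowdown):
    """Rough estimate: sequential round trips + raw download time + (slowed) CPU work."""
    latency_s = round_trips * rtt_ms / 1000
    download_s = page_bytes * 8 / (bandwidth_mbps * 1_000_000)
    cpu_s = main_thread_s * cpu_slowdown
    return latency_s + download_s + cpu_s


PAGE = dict(page_bytes=2_000_000, round_trips=10, main_thread_s=0.5)  # hypothetical page

mobile = estimated_load_s(**PAGE, rtt_ms=150, bandwidth_mbps=1.6, cpu_slowdown=4)
fast = estimated_load_s(**PAGE, rtt_ms=40, bandwidth_mbps=10, cpu_slowdown=1)
print(f"mobile: {mobile:.1f} s, fast connection: {fast:.1f} s")  # mobile: 13.5 s, fast connection: 2.5 s
```

Even in this crude model, the same page takes over five times longer under mobile constraints, and most of the gap comes from payload size and CPU work.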

Understanding PageSpeed Insights Test Results

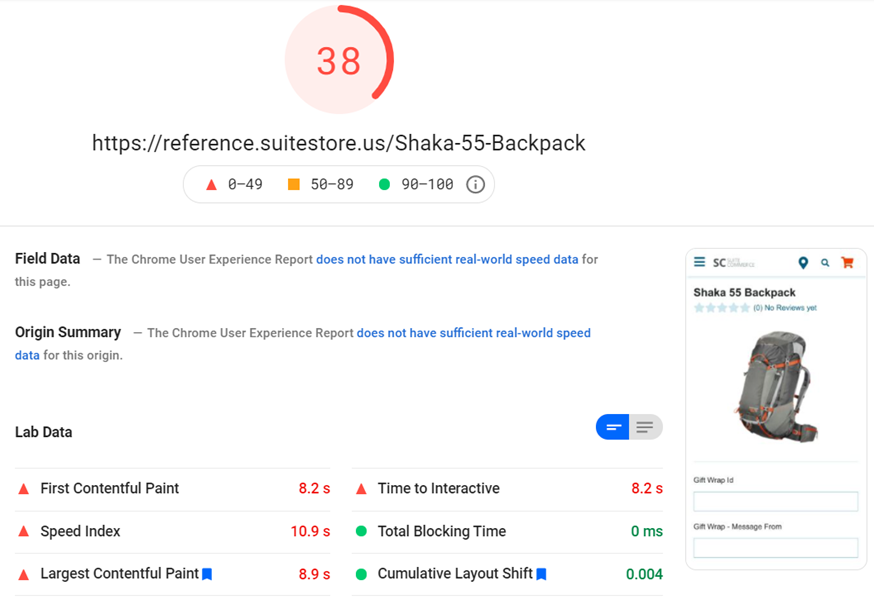

Let us say that you run a test for a page on the developer portal in PSI and select the mobile test results, this is the kind of page you might get back:

At the top of the page is an overall score; in this case it is 95, which, as the indicators below show, means that it is a good score. Below that are two sections, Field Data and Origin Summary — in our case, both are marked with text that reads “The Chrome User Experience Report does not have sufficient real-world speed data for this page”.

Below that are breakdowns of the various performance timings it tested for. In the case of this example test, all are considered good, except for LCP, which has been flagged as needing improvement.

Real User Data vs Artificial Lab Data

Alternatively, the Field Data and Origin Summary sections might actually have data in them.

Essentially, in addition to showing you ‘lab’ data from its own ad hoc test, PSI will also try to fetch aggregated data from the Chrome User Experience Report (CrUX). This data comes from people who use Chrome and have opted in to sharing usage data. Field Data refers to data from real users visiting this particular page, and Origin Summary refers to real user visits across the entire site.
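If you use the PageSpeed Insights API listed earlier, the two kinds of data arrive in separate parts of the JSON response: as documented for the v5 API, `loadingExperience` holds the page-level field data, `originLoadingExperience` holds the origin summary, and `lighthouseResult` holds the lab run. The sketch below builds a request URL and splits a response accordingly. It leaves out the HTTP call itself so that it runs offline, treats a missing `metrics` object as ‘no field data’, and uses a hypothetical, heavily trimmed sample response.

```python
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile"):
    """Build a PSI v5 request URL; for regular use, also pass an API key as `key`."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def split_field_and_lab(response):
    """Separate real-user (CrUX) field data from the Lighthouse lab result."""

    def field(section):
        metrics = response.get(section, {}).get("metrics")
        if not metrics:
            return None  # treated here as "insufficient real-world data"
        return {name: (m.get("percentile"), m.get("category")) for name, m in metrics.items()}

    return {
        "page_field": field("loadingExperience"),
        "origin_field": field("originLoadingExperience"),
        "lab_score": response["lighthouseResult"]["categories"]["performance"]["score"],
    }


# Hypothetical, heavily trimmed response: no page-level data, some origin-level data.
sample = {
    "loadingExperience": {"id": "https://shop.example.com/backpack"},
    "originLoadingExperience": {
        "metrics": {"LARGEST_CONTENTFUL_PAINT_MS": {"percentile": 2300, "category": "FAST"}}
    },
    "lighthouseResult": {"categories": {"performance": {"score": 0.38}}},
}
print(psi_request_url("https://shop.example.com/backpack"))
print(split_field_and_lab(sample))
```

Keeping the lab score in a separate field makes it harder to mistake it for the real-user data that actually feeds into ranking.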

One thing that cannot be overstated is that real user data is by far the most valuable data available for analyzing your site’s performance. Google has said it will only use real user data in its ranking decisions, and has no plans to use data from artificial test results.

What Does It Mean if My Site Doesn’t Score More than 90?

Of course, the developer portal is not a web store, so what happens if you test a URL such as a product detail page on a web store?

In this test, the page scores 38 overall and has a number of metrics from the lab data showing as falling into the lower end of what’s considered acceptable.

What is going on? Why is there such a big difference in scores? To answer that, it is important to understand the differences in complexity between web stores and sites like the developer portal:

- The developer portal is a statically generated site serving reference material through simple HTML pages with very little JavaScript

- Web stores are complex JavaScript-powered web sites dynamically serving content, enabling ecommerce operations, and making multiple requests for data and images

In short: the features of a web store require heavy computing power and bandwidth, and reference sites do not.

So, does this mean that after the algorithm update this site’s SEO would be tanked?

The answer is no. To quote directly from Google’s Search Central documentation:

“While page experience is important, Google still seeks to rank pages with the best information overall, even if the page experience is subpar. Great page experience doesn’t override having great page content. However, in cases where there are many pages that may be similar in relevance, page experience can be much more important for visibility in Search.”

Relevant content is the single biggest ranking factor, and page experience is just one of many, many factors Google uses to sort results. If people are still searching for the backpack on this product page, the page would likely still show up, but it might rank higher or lower depending on how favorably its experience scores measure up against its competitors’.

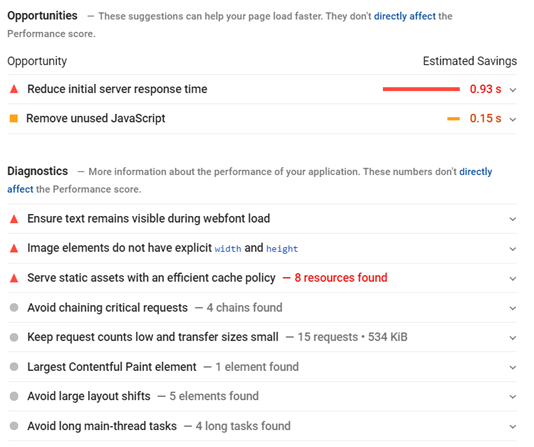

Opportunities and Diagnostics

When reading the results from a PSI test, it is important not to get too hung up on the scoring and instead try to focus on the raw timings, as well as what is in the Opportunities and Diagnostics sections.

Google point out that these do not directly impact score results, but they may indirectly affect them. For example:

- Correcting an error in your code can improve your best practices score

- Setting absolute widths and heights on your images can prevent layout shift

- Removing unused JavaScript from your code can decrease the amount of time spent downloading and processing it
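The second point is easy to check for yourself. The minimal sketch below lists `<img>` tags that are missing a `width` or `height` attribute, using Python’s standard-library HTML parser. It is only a heuristic: images whose space is reserved through CSS will still be flagged even though they may not cause layout shift, and images injected by JavaScript after the page loads will be missed.

```python
from html.parser import HTMLParser


class UnsizedImageFinder(HTMLParser):
    """Collects <img> tags that lack an explicit width or height attribute."""

    def __init__(self):
        super().__init__()
        self.unsized = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if not attributes.get("width") or not attributes.get("height"):
            self.unsized.append(attributes.get("src") or "(no src)")


def find_unsized_images(html):
    """Return the src of every <img> without both width and height attributes."""
    finder = UnsizedImageFinder()
    finder.feed(html)
    return finder.unsized


page = '<img src="hero.jpg" width="1200" height="600"><img src="thumb.jpg"><img src="logo.png" width="100">'
print(find_unsized_images(page))  # ['thumb.jpg', 'logo.png']
```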

Both sections will give you a list of things to check. Although improving them won’t directly affect your performance score, we know that they can have some impact on user experience. It is important to note that while some things can be changed by our customers and partners, some things will require changes to NetSuite.

The Industry’s Perspective

When testing your site, it is important to keep in mind that this testing should not be performed in a vacuum. Perhaps most importantly, remember that page performance will be used as one of many differentiators between similarly ranked sites; in other words, if your competitors’ scores are the same as yours, performance is unlikely to be a valuable ranking differentiator.

Indeed, going beyond your own site as well as your competitors’ sites, it is also valuable to consider what is going on across the entire ecommerce ecosystem. When we looked at the scoring differences between a simple reference site and a complex web store, we saw that the gap was quite significant. But how common is this?

Well, when we took a wider perspective, looking across the entire ecommerce industry, a picture emerged indicating that this gap is actually very common. If an overall score greater than 90 is considered good, then it seems that few, if any, web stores are considered good by Google.

To back up this assertion, there is some anecdotal evidence you can gather yourself: next time you visit a web store, put its pages into PSI and run mobile tests on it — the results may (or may not) surprise you.

But there are also people who have spent time digging into this and testing thousands of sites. There are people whose job it is to assess and optimize slow sites, and who struggle to find any site that performs well by Google’s definitions.

The key takeaway from this is that if this algorithm change is a coming storm, then all web store owners are finding themselves in its path.

Shopify

Shopify is an ecommerce platform whose size and popularity make it easier than most to find public data, analysis and sentiment on how well its stores perform in PageSpeed Insights.

Darjan Hren is a freelance ecommerce consultant with experience optimizing Shopify sites. He tested 1000 Shopify web stores using Lighthouse and produced a blog post with some interesting insights. One is that no site he tested scored above the ‘good’ threshold of 90, but the most important one is:

“I’ve analysed the top 1000 Shopify stores … the average Lighthouse performance score is 27. These are not some one man drop shipping stores but big brands making multi-million revenue.”

In other words, there is evidence that backs up the opinion that ecommerce sites are very difficult to optimize to the high standards that Google demands. If your site scores above 27 in Lighthouse, then you can say you’re above the average of Shopify’s top 1000 sites.

Another Shopify expert, Joe Lannen, founder and CTO of SpeedBoostr, a Shopify site optimization service, published a case study showing that high PSI scores don’t always equate to fast page loads. Again, it makes for interesting reading, but there is one key quote worth pulling out:

“Shopify servers are fast, and professional themes are usually well built, yet I don’t think I’ve ever seen a Shopify site score high in both mobile and desktop on Google PSI.”

Finally, we have some anecdotal evidence that, given its source, is worth considering. Helen Lin is a web development manager for Shopify and, writing in a blog post not too dissimilar from this one, she says:

“What exactly is a realistic Google Lighthouse score? … In the development of a site, I can get scores of 50 to 60. In production, my scores usually land around 30, which makes me cringe — trust me, I don’t like it either.”

With all of this said, this is not an attack on Shopify; instead, their situation is used to echo one you might find yourself in. It is worth remembering that running a fully featured web store will have a performance impact, and that this is something happening throughout the industry.

Amazon

While researching industry insights, I came across one that was made in a discussion about Shopify but concerned Amazon. It comes from Ilya Grigorik who, at the time, was Director of Developer Relations at Google.

Responding to a commenter who found it odd that a site as big as Amazon was scoring so poorly despite seeming (to them) perfectly usable, he wrote:

“Amazon is a good example to consider: the PSI score may not be as high as you might expect, but real-world experience observed by users is actually really good and far above most other sites. Which is to say, Amazon is definitely not ignoring performance, it’s just that the assumptions in the synthetic test executed by PSI may not align well with Amazon(.com’s) user population.”

The point he makes is that if you put a page from Amazon into the testing tools, it will come back with a score in line with the rest of the industry. But does that mean that Amazon is neglecting performance? No, of course not. In fact, if you try out their site yourself as a casual user using a mobile device, you will likely find that their site’s performance is just fine. The results you get from an artificial PSI test may not be indicative of your page’s actual performance.

So what’s going on? Could it be that PageSpeed Insights / Lighthouse is not good at assessing the performance of ecommerce sites?

Considerations and Criticisms of PageSpeed Insights and Lighthouse

In short: Lighthouse and the services that wrap it, like PageSpeed Insights, are not great when it comes to:

- Providing a wide variety of performance metrics

- Assessing complex sites, such as web stores

- Simulating mobile tests that reliably replicate real-world data

There are also concerns around using the tests to draw conclusions and as the basis for decision-making. Let’s look into this more.

The Use of Simple Scoring

One of the things that PageSpeed Insights does is put scores front and center. If you’re a non-technical person, or just someone new to performance testing, then it would be understandable to get caught up with what the numbers are without looking at why those numbers have come up.

Scores like these can be useful for headlines or executive summaries, but they barely scratch the surface of performance and user experience. If a web store owner sees those numbers and considers them poor, it could lead to anxiety and an obsession with reaching 90, 95 or 100. Given how complex web stores are, and the limitations that ecommerce platforms might place on their customers’ sites, scores this high are not realistic.

Bias Towards First-Load Performance

Google, admirably, has set out to measure and test loading performance, because fast loading times improve a user’s experience. However, it is only focusing on the first load and not on anything afterwards.

This bias can penalize sites that optimize loading performance for the entire user journey, not just the first time a page loads. For example, single-page applications front-load a lot of resources so that the first time a user visits a page, the first loading and rendering process is quite CPU and network intensive. However, when a user navigates around the site, subsequent page loads are much smaller because a lot of content is reused. In this scenario, Google would say that these pages provide a poor user experience when, in truth, the opposite is the case.

Layer0, a company that specializes in speeding up websites, analyzed how Core Web Vitals and single-page applications interact. Their summary is that Google’s performance measurement tools penalize SPAs. They agree with our perspective that SPAs usually improve performance, and that Google’s tools don’t measure this.

The blog post’s author, Lauren Bradley, notes:

“The primary benefit of a SPA is that subsequent pages don’t reload during navigation, making for a fast, frictionless browsing session. This is exactly what Google has been trying to emphasize for over a decade. However with CrUX [Chrome User Experience Report] only measuring first-page loads, the fastest web applications are getting penalized in most of Google’s speed measurement tools.”

Tests are Performed on a Per-Page Basis

This is not a criticism, but something you need to be aware of: when using the testing tools, keep in mind that you are performing an individual test at a specific point in time on an individual webpage.

This has a number of implications:

- Network lag or high CPU usage at the time of the test could impact results

- Some pages might perform faster than others

- Some resources may or may not be cached

And so on. In other words, if you want to go beyond ad hoc snapshots and draw conclusions from these tests, then you should put measures in place to test rigorously, such as:

- Controlling for variance in network conditions, CPU usage and other factors

- Performing multiple tests on the same page and then choosing the median result

- Testing many different areas of your site, including the homepage, product listing pages (PLPs), product detail pages (PDPs), the login page and static content pages
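Choosing the median result is easy to automate. This sketch assumes each run has already been reduced to a dict with a `score` key (a hypothetical format, such as rows built from Lighthouse JSON reports). It returns the median run itself, rather than averaging each metric, so that all of the timings you look at come from one real test. It is a simple approach; more elaborate ones, such as picking the run closest to the median on several metrics at once, also exist.

```python
def median_run(runs):
    """Return the run whose score is the median; with an even count, the lower middle run."""
    ordered = sorted(runs, key=lambda run: run["score"])
    return ordered[(len(ordered) - 1) // 2]


# Hypothetical results from five mobile runs against the same product page.
runs = [
    {"run": 1, "score": 38},
    {"run": 2, "score": 45},
    {"run": 3, "score": 31},
    {"run": 4, "score": 40},
    {"run": 5, "score": 52},
]
best_guess = median_run(runs)
spread = max(r["score"] for r in runs) - min(r["score"] for r in runs)
print(best_guess, spread)  # {'run': 4, 'score': 40} 21
```

A 21-point spread between the best and worst runs of the same page is a good reminder of why a single ad hoc test is not a reliable basis for decisions.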

Google have documented other sources of variability and how to control for them.

Mobile Tests are Synthetic

To be fair, Google has been clear that only real-world data will be used in ranking decisions. However, in the absence of real data, ‘lab’ data can be synthesized by running tests. Anecdotally speaking, there is usually a large discrepancy between the results of artificial tests and real ones, which means that lab results are not particularly representative.

Many people who see results from these synthetic tests become unduly anxious, but the results are only there to provide indicators and to fill the void when real user data doesn’t exist.

Mobile is Not For Everyone

Google’s preference for mobile-first represents a trend shift throughout their entire ecosystem. However, that trend may not represent the visitors to particular sites.

In other words, if you operate a web store where the majority of your customers use desktop devices to visit your site, then it doesn’t make sense for Google to judge you by the higher standards that mobile devices and network conditions require. By extension, it doesn’t seem fair to be judged on scores derived from mobile tests.

Other Metrics are Important for Ecommerce

As mentioned in a previous section, there are other things we can judge performance on. Furthermore, when it comes to ecommerce and web stores specifically, there are other metrics that measure how good a user experience is, going beyond simple first-load performance.

Consider also measuring the performance of:

- Returning results after performing a keyword search

- Filtering results when using faceted navigation

- Navigating around a category structure

- Adding an item to the cart

- Logging in

- Browsing order history

- Progressing through the order wizard

- Hitting the order submit and seeing the confirmation page

- Completing the entire happy path of the user journey

Furthermore, when it comes to running a successful ecommerce business, there are many metrics that you need to account for when judging success:

- Page views

- Unique visitors

- Average order size

- Average order value

- Conversion rate

- Cart abandonment rate

- Refund and return rate

- Repeat customer rate

And many more. Many businesses use these in their key performance indicators (KPIs).
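Most of these are simple ratios. Here is a minimal sketch of how a few of them are commonly defined; the field names and figures are hypothetical, ‘average order size’ is taken here to mean items per order (some businesses use the term differently), and cart abandonment assumes every order started from a cart.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    """Raw counts for one reporting period (field names are hypothetical)."""
    sessions: int          # site visits
    orders: int            # completed orders
    revenue: float         # total value of those orders
    items_sold: int        # units across all orders
    carts_created: int     # sessions in which something was added to the cart
    customers: int         # distinct customers who placed an order
    repeat_customers: int  # of those, customers who ordered more than once


def kpis(s):
    """Derive a few common ecommerce KPIs from raw counts."""
    return {
        "conversion_rate": s.orders / s.sessions,
        "average_order_value": s.revenue / s.orders,
        "average_order_size": s.items_sold / s.orders,  # items per order
        "cart_abandonment_rate": 1 - s.orders / s.carts_created,
        "repeat_customer_rate": s.repeat_customers / s.customers,
    }


month = PeriodStats(sessions=20_000, orders=400, revenue=30_000.0, items_sold=1_000,
                    carts_created=1_600, customers=360, repeat_customers=60)
for name, value in kpis(month).items():
    print(f"{name}: {value:.3f}")
```

Tracking these alongside performance data makes it possible to see whether a speed improvement, or a slower but richer feature, actually moves the numbers your business cares about.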

Sometimes a Better User Experience Means Slower Performance

Finally, as a thought, consider that giving a customer a good experience can often mean taking a performance hit. Google has set the limit of ‘good’ LCP at 2.5 seconds, which means that as long as your site does its largest contentful paint within that time, then you’re good. Accordingly, you could consider this a ‘budget’ where you have 2.5s to ‘spend’.

A naive interpretation of Google’s benchmarks would suggest cutting out everything that increases this time, just so that you can offer what Google considers a good user experience. This could lead you to remove things from your site that people consider standard and that actually make for a better shopping experience.

But consider some things that have a high performance cost but could greatly improve customer experience and boost your ecommerce KPIs:

- Large images and beautiful (but computationally expensive) design choices

- Product reviews

- Multiple, high-quality images

- Live chat

- Embedded video

- Extensions and plugins

I suppose Google would say, “You can have all of those things and still be performant”, and that may well be true. Indeed, there are optimization techniques for all of these things. But they will have an impact and, cumulatively, could end up causing you to overspend your performance budget.
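To make the budget idea concrete, here is a sketch that adds estimated costs for a few of these features to a baseline LCP and reports when the running total exceeds Google’s 2.5 s ‘good’ threshold. All of the millisecond figures are hypothetical, and treating every feature as adding directly to LCP is a simplification: a live chat widget loaded after the main content, for instance, may affect TBT more than LCP.

```python
LCP_BUDGET_MS = 2500  # Google's "good" LCP threshold

# Hypothetical estimates of how much each feature adds to LCP on a given page.
FEATURES_MS = {
    "image gallery": 400,
    "product reviews": 300,
    "live chat": 250,
    "embedded video": 350,
}


def check_budget(baseline_ms, feature_costs_ms, budget_ms=LCP_BUDGET_MS):
    """Add features one by one and report the running total against the budget."""
    total = baseline_ms
    for feature, cost in feature_costs_ms.items():
        total += cost
        status = "within budget" if total <= budget_ms else "OVER budget"
        print(f"+ {feature:<16} {cost:>4} ms -> {total:>5} ms ({status})")
    return budget_ms - total  # negative means overspent


remaining = check_budget(1500, FEATURES_MS)
print("remaining budget:", remaining, "ms")
```

With these made-up numbers, the first three additions fit within the budget and the embedded video tips the page 300 ms over, which is exactly the kind of trade-off you would then weigh against its value to shoppers.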

So, what do you do? Offer what you think is a better UX to your shoppers or conform to a budget that Google says offers a great UX? It’s up to you to decide.

Key Takeaways

If you’re looking to make changes to your web store to improve your SEO, there are some things you can do — not all of them are related to the performance score you’ll get back from PageSpeed Insights!

Take a Breath

Metaphorically, but also literally.

There are some people who see these changes as a cause of panic, but there is no good reason to be alarmed. Google have said:

- The changes will be gentle and gradual

- Sites should not expect drastic changes

- Lab simulated / non-human data is not used in ranking decisions

Google makes modifications to its algorithm regularly.

SEO Fundamentals and Content are Supreme

The age-old SEO adage imploring website owners to offer great content to users (and search engines) still holds. This is another thing that Google has said very clearly: if your site offers the most relevant content for a search, Google will prefer it over others. Simply having a fast site (by Google’s standards) is not enough to suddenly leap up the rankings for search terms your content isn’t relevant to.

Once you have that content, ensuring that it is optimized for discovery and indexing by search engine crawlers is the next step. Similarly, performance optimization techniques and ideas have been developed and improved for decades: there’s always plenty you can do to improve your search rankings that doesn’t require you to focus on results from PageSpeed Insights.

These are topics that are widely discussed on the internet and we have some additional information in our Performance section.

Lighthouse and PageSpeed Insights are Not Calibrated for Web Stores

If you do use Google’s performance testing tools, keep in mind that they are designed to be agnostic of technology, architecture, content and purpose.

A site like a developer portal can score over 90 because it has an uncomplicated codebase and a simple function; web stores are, by comparison, much more complex and offer a wide range of dynamic and powerful features. It is only natural that web stores have lower performance scores.

Across the ecommerce ecosystem, the average web store has a performance score of 30-40. And if you go looking for web stores that meet all of Google’s benchmarks for ‘good’ performance, you will find that stores clearing this high bar are extremely rare, and may not exist at all.

First-Load Performance Metrics are Not Representative of All Performance

As mentioned above, the things Google tests for are certainly useful measures of user experience, but they are not the be-all and end-all of performance testing.

From a generic web site performance testing perspective, there are many other measures you can use to get good insight into how your web store is performing. For example, take a look at how you can Get Started with WebPagetest to Test Your Site’s Performance: this tool can produce performance metrics for a user’s entire journey through your site, not just the first page load.

From a web store business point of view, there are many other metrics that measure the effectiveness of your web store, and many of them are connected to performance and user experience. Setting goals and KPIs for your business can be a really good way to keep your web store on track.

Compare Against Your Competitors

One of the key things to remember is that this algorithm change is used to differentiate sites that would otherwise be indistinguishable in rank. Accordingly, when testing your site, also test your competitors!

The reason for this is that it puts any score you have in context. Some web store owners may be disappointed or worried about their score, but then find that their competitors’ scores are worse than theirs.

By competitors, we don’t necessarily mean the sites or pages you think of as your competitors. Look at which search terms are most popular for you, and at the sites that also turn up in those search results. From Google’s point of view, they’re the ones you’re really competing against.
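Once you have field data for your own pages and for the sites you share search results with, the comparison itself is simple. The sketch below is a minimal illustration, assuming you have already collected 75th-percentile values for each site (for example, from the field data section of PageSpeed Insights). The site names, metric keys and numbers are hypothetical, and the function `rank_against_competitors` is our own illustrative helper, not part of any Google tool.

```python
from typing import Dict

# Hypothetical 75th-percentile field values per site.
# LCP and FID are in milliseconds; CLS is unitless. For all three, lower is better.
Metrics = Dict[str, float]


def rank_against_competitors(mine: str, sites: Dict[str, Metrics]) -> Dict[str, int]:
    """Return your position (1 = best) among all sites, per metric."""
    ranks: Dict[str, int] = {}
    for metric, my_value in sites[mine].items():
        # Only compare against sites that actually have data for this metric;
        # low-traffic competitors may have no field data at all.
        values = [m[metric] for m in sites.values() if metric in m]
        # Position = 1 + number of sites strictly better than you (ties share a rank).
        ranks[metric] = 1 + sum(1 for v in values if v < my_value)
    return ranks


if __name__ == "__main__":
    sites = {
        "my-store.example": {"lcp_ms": 3100, "fid_ms": 80, "cls": 0.05},
        "rival-a.example": {"lcp_ms": 3900, "fid_ms": 120, "cls": 0.02},
        "rival-b.example": {"lcp_ms": 2800, "fid_ms": 95, "cls": 0.30},
    }
    print(rank_against_competitors("my-store.example", sites))
```

With these made-up numbers, the store is second on LCP and CLS but first on FID, which is exactly the kind of context a raw score on its own won’t give you.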

Some Architecture Doesn’t Score Well

If your web store is a single-page application (SPA), the tests do not account for this. SPAs prioritize performance and user experience across the entire user journey, typically by doing more work up front, so they unfortunately take a hit when it comes to testing first-page performance. You shouldn’t see this as Google disapproving of this technology, or as an indication that it is bad for performance.

And when assessing what things you can change on your site to improve performance, keep in mind that there will be some architecture decisions built into your web store that are out of your control. Even if you can identify certain improvement opportunities for your site, you may find that you (as a web store owner or developer) simply won’t be able to make changes to them because they are built into the application you’re using.

Real User Data is Always Better than Artificial ‘Lab’ Data

When you run a test in PageSpeed Insights, you will be shown data from real users if it is available. You should always prefer that over the simulated data from tests for two simple reasons:

- It comes from actual people who have visited your site using a mobile device

- It is the only data that Google takes into account when making ranking decisions

The tests performed by Lighthouse, and the scores and metrics it provides, are to be considered guides. Perhaps more specifically, they are guides for developers to make improvements for a site.

While PageSpeed Insights is accessible and easy for non-technical folk to use, they should be aware that there is a lot going on ‘under the hood’ to produce those numbers.
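To make the real-user data less of a black box: Google assesses each Core Web Vital at the 75th percentile of page loads, then buckets the result as ‘good’, ‘needs improvement’ or ‘poor’. The sketch below shows that idea on a list of raw samples. The thresholds are the ones Google published for the 2021 update (check Google’s current documentation, as metrics and thresholds can change). The nearest-rank percentile method is our assumption, since the exact aggregation Google uses may differ; the metric keys and helper names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Core Web Vitals thresholds published for the 2021 update:
# (upper bound for "good", value above which a result is "poor").
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "lcp_ms": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "fid_ms": (100, 300),    # First Input Delay, milliseconds
    "cls": (0.1, 0.25),      # Cumulative Layout Shift, unitless
}


def p75(samples: List[float]) -> float:
    """75th percentile via the nearest-rank method (an assumption for this sketch).

    Raises IndexError on an empty list, since there is no data to assess.
    """
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank into the sorted list
    return ordered[rank - 1]


def classify(metric: str, value: float) -> str:
    """Bucket a 75th-percentile value the way PageSpeed Insights labels field data."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value > poor:
        return "poor"
    return "needs improvement"


if __name__ == "__main__":
    # Hypothetical LCP samples (ms) from real visits to one page.
    lcp_samples = [1800, 2100, 2400, 2600, 3000, 5200, 2200, 2300]
    value = p75(lcp_samples)
    print(value, classify("lcp_ms", value))
```

Note how one very slow visit (5200 ms) doesn’t decide the outcome on its own; it is the experience of the bulk of visitors, up to the 75th percentile, that counts.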

Final Thoughts

Google’s upcoming changes to their algorithm have led many to believe that they could cause seismic shifts in rankings. The available evidence, however, suggests otherwise. While we don’t know what’s going on behind the scenes at Google, or exactly what the changes will look like, the indications are that the page experience factors Google has identified will play a small part in how websites are ranked.

Business users can focus their efforts on checking the quality of the product descriptions and titles, and ensuring that all the backend SEO and performance features of their site are accounted for. For example:

- Update your product descriptions to include keywords that people search for

- Enable and verify CDN caching

- Enable and verify image resizing and optimization

- Audit third-party integrations and plugins and remove any that are not needed

- Deactivate any unused features
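One quick way to start verifying CDN caching is to look at the `Cache-Control` header your pages and assets are served with. The sketch below parses that header and reports how long a shared cache (such as a CDN) is explicitly allowed to serve the response, following the standard HTTP rules that `no-store` and `private` forbid shared storage and that `s-maxage` overrides `max-age` for shared caches. It is only a first check under that assumption: many CDNs can be configured to override origin headers, so your CDN’s own settings and reports remain the real source of truth. The function names are our own.

```python
from typing import Dict, Optional


def parse_cache_control(value: str) -> Dict[str, Optional[str]]:
    """Split a Cache-Control header into {directive: argument-or-None}."""
    directives: Dict[str, Optional[str]] = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, arg = part.partition("=")
        directives[name.strip().lower()] = arg.strip().strip('"') if sep else None
    return directives


def shared_cache_ttl(value: str) -> Optional[int]:
    """Seconds a shared cache may reuse the response without revalidating.

    Returns None when the header forbids shared caching or sets no explicit
    lifetime, and 0 when the response may be stored but must be revalidated.
    """
    d = parse_cache_control(value)
    if "no-store" in d or "private" in d:
        return None
    if "no-cache" in d:
        return 0
    # s-maxage applies only to shared caches and takes precedence over max-age.
    for key in ("s-maxage", "max-age"):
        arg = d.get(key)
        if arg is not None and arg.isdigit():
            return int(arg)
    return None


if __name__ == "__main__":
    print(shared_cache_ttl("public, max-age=60, s-maxage=3600"))
```

To check a live page, you could read the header with something like `urllib.request.urlopen(url).headers.get("Cache-Control", "")`, where `url` is one of your own product or asset URLs. Many CDNs also add their own cache hit/miss header, but its name varies by provider.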

Developers can get more forensic, for example:

- Profile and optimize script performance

- Test critical path performance

- Check that JSON-LD/microdata is correct and accurate

- Keep up to date on the latest SEO and performance information from around the web
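As a starting point for checking structured data, the sketch below pulls every `<script type="application/ld+json">` block out of a page’s HTML, confirms it parses as JSON, and flags `Product` entries that are missing a few properties. The specific properties checked (`name`, plus `price` and `priceCurrency` on each offer) are our own hypothetical minimum for illustration, not Google’s official requirements; Google’s structured data documentation and Rich Results Test are the authority there. It also ignores `@graph` containers and microdata, which a fuller check would need to handle.

```python
import json
from html.parser import HTMLParser
from typing import List

# Hypothetical minimum checks for this sketch; not Google's official list.
REQUIRED_OFFER_FIELDS = ("price", "priceCurrency")


class JsonLdExtractor(HTMLParser):
    """Collects the text of every <script type="application/ld+json"> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self.blocks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def check_products(html: str) -> List[str]:
    """Return a list of human-readable problems found in Product JSON-LD."""
    parser = JsonLdExtractor()
    parser.feed(html)
    problems: List[str] = []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"invalid JSON-LD: {exc.msg}")
            continue
        items = data if isinstance(data, list) else [data]
        for item in items:
            if not isinstance(item, dict) or item.get("@type") != "Product":
                continue
            if not item.get("name"):
                problems.append("Product is missing 'name'")
            offers = item.get("offers")
            offer_list = offers if isinstance(offers, list) else [offers] if offers else []
            if not offer_list:
                problems.append("Product has no 'offers'")
            for offer in offer_list:
                for field in REQUIRED_OFFER_FIELDS:
                    if not isinstance(offer, dict) or field not in offer:
                        problems.append(f"Offer is missing '{field}'")
    return problems
```

Running a check like this against a sample of product pages after each theme or plugin change can catch structured data that has quietly broken.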

There’s always work that can be done to test and optimize for search engine rankings, and this algorithm update is as good a time as any to get stuck in.

Code samples are licensed under the Universal Permissive License (UPL), and may rely on Third-Party Licenses